Jingxiang Guo | 郭京翔

I am a Master of Computing (Artificial Intelligence) student at the National University of Singapore. I have research collaborations with NUS CLeAR Lab (advised by Prof. Harold Soh), SJTU ScaleLab (advised by Prof. Yao Mu), NUS LinS Lab (advised by Prof. Lin Shao) and HITsz RLGroup (advised by Prof. Yanjie Li). I received my B.Eng. in Automation from Harbin Institute of Technology, Shenzhen.

Email / CV / GitHub / Google Scholar / More Academic Links / WeChat

News

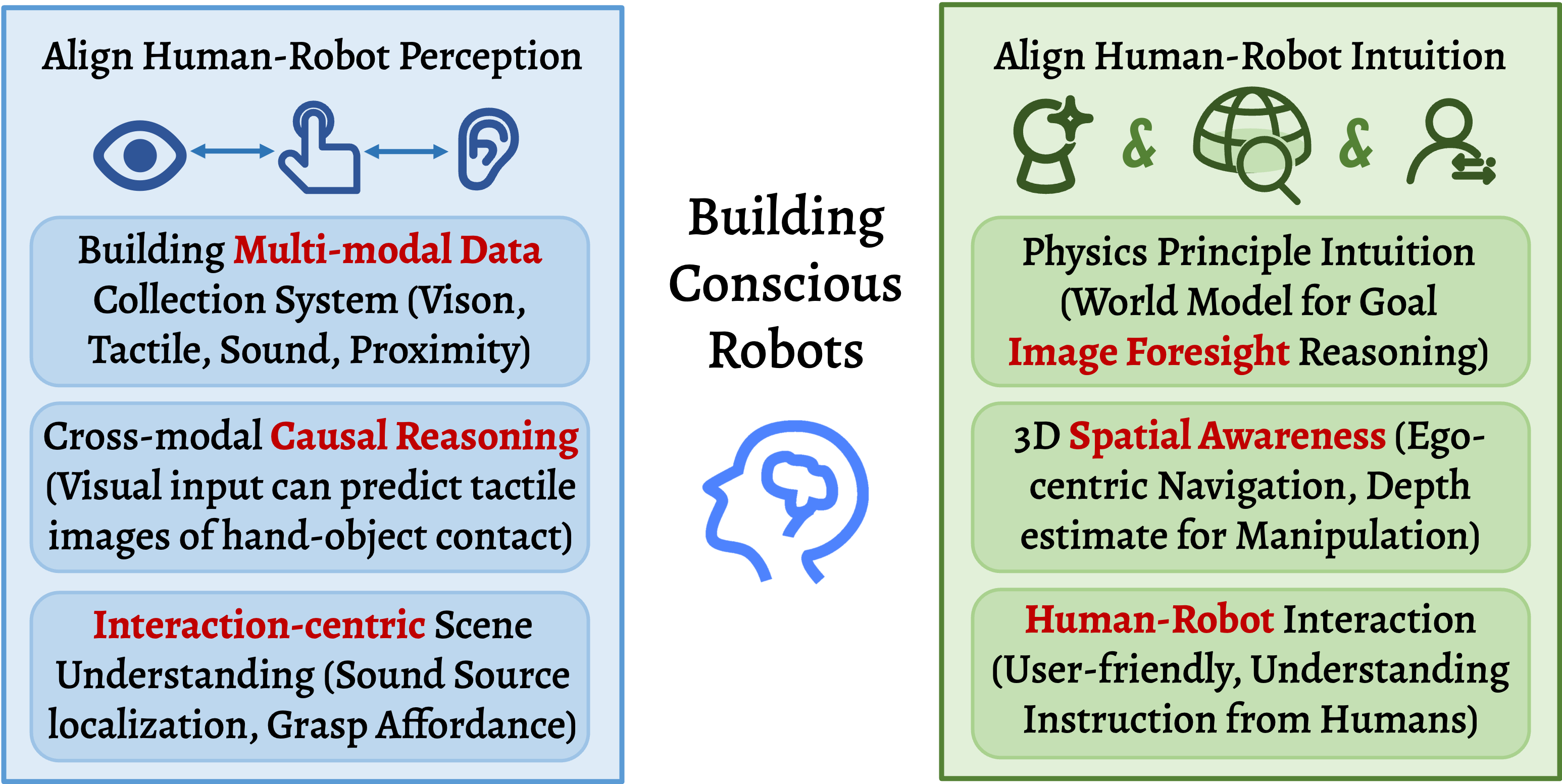

Research

My research interests lie in 🤖 robot multi-modal learning, 🦾 dexterous manipulation, and 🤝 human-robot interaction.

My long-term goal is to create truly conscious robotic life, pushing the boundaries of what's possible with machines.

Research Proposal 🔗

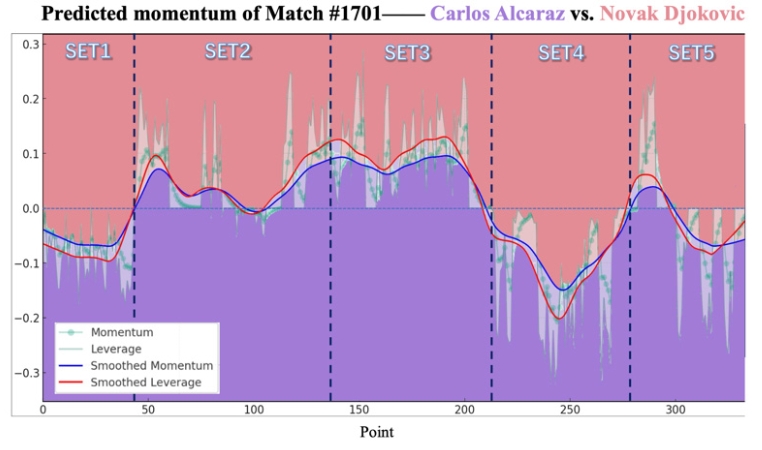

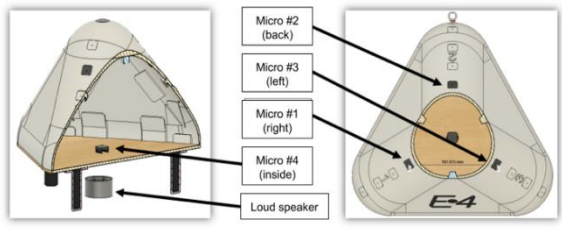

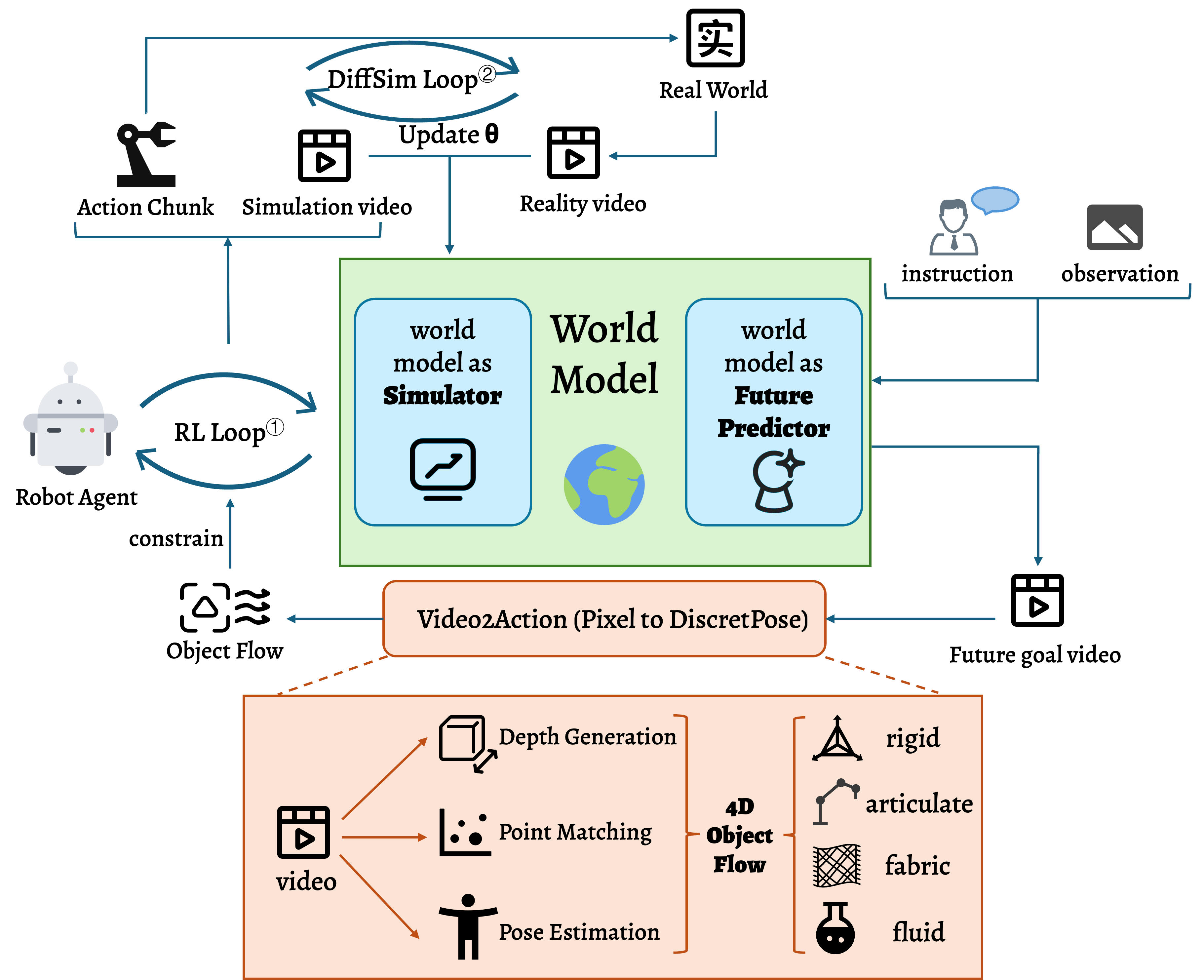

Research Map

World Model Framework

Selected Publications

Papers sorted by recency.

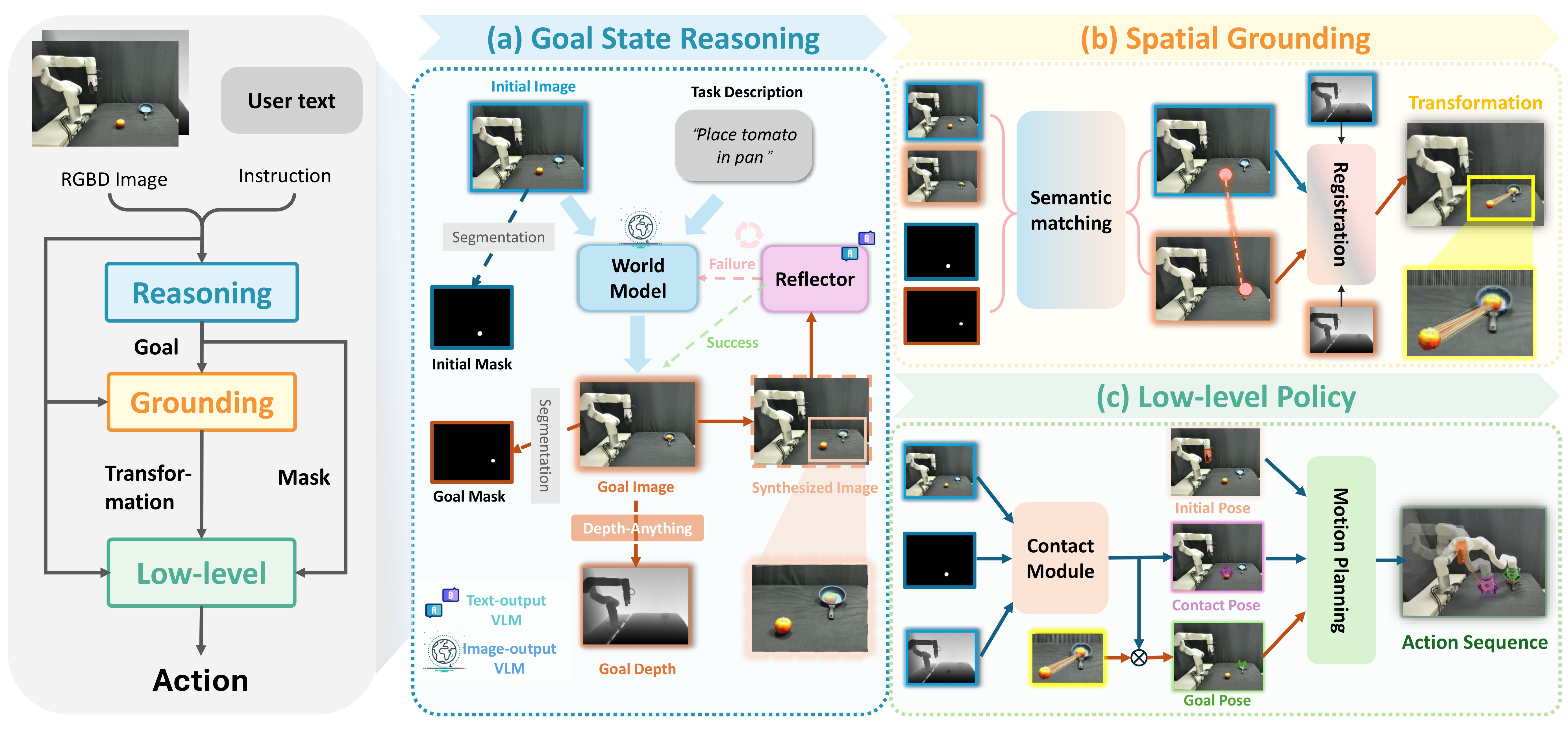

Goal-VLA: Image-Generative VLMs as Object-Centric World Models Empowering Zero-shot Robot Manipulation

Website / arXiv / Code

Poster Presentation, IROS 2025 @ Human-in-the-loop Robot Learning

TL;DR:

A zero-shot framework that leverages Image-Generative VLMs as world models to generate desired goal states for generalizable manipulation, using object state representation as the interface between high-level and low-level policies.

TelePreview: A User-Friendly Teleoperation System with Virtual Arm Assistance for Enhanced Effectiveness

Website / arXiv / Code (Coming soon)

Best Paper Award, ICRA 2025 @ Human-Centric Multilateral Teleoperation

Spotlight Presentation, ICRA 2025 @ Human-Centric Multilateral Teleoperation

TL;DR:

Implements a low-cost teleoperation system using data gloves and IMU sensors, paired with an assistant module that improves the data collection process by previewing the robot's upcoming motions with a virtual arm.

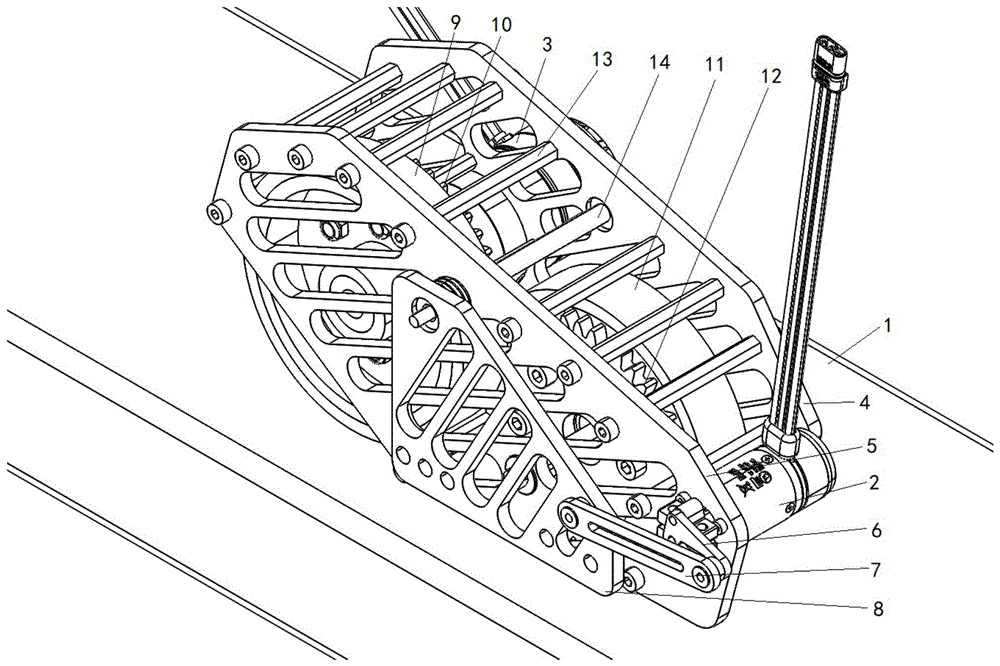

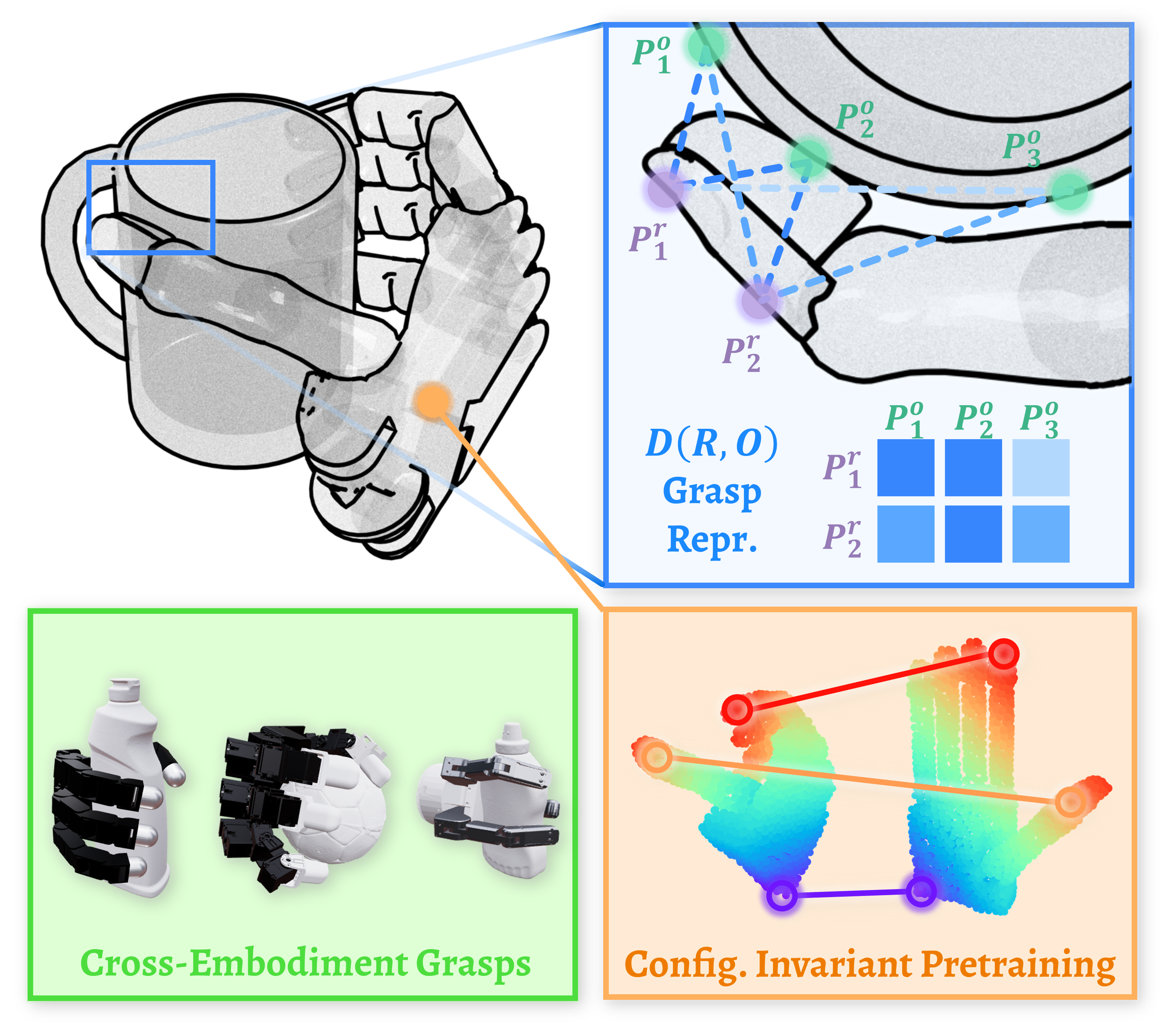

$\mathcal{D(R,O)}$ Grasp: A Unified Representation of Robot and Object Interaction for Cross-Embodiment Dexterous Grasping

Website / arXiv / Code / Media (机器之心)

ICRA 2025 (International Conference on Robotics and Automation)

ICRA 2025 Best Paper Award on Robot Manipulation and Locomotion

Best Robotics Paper Award, CoRL 2024 @ MAPoDeL

Oral Presentation, CoRL 2024 @ MAPoDeL

Spotlight Presentation, CoRL 2024 @ LFDM

TL;DR:

Introduces $\mathcal{D(R,O)}$, a novel interaction-centric representation for dexterous grasping that goes beyond traditional robot-centric and object-centric approaches, enabling robust generalization across diverse robotic hands and objects.

Award

Education

National University of Singapore, Singapore, 2025.08 - Present

Master of Computing (Artificial Intelligence). Dissertation: Tactile Representation Learning for Heterogeneous Tactile Sensors

Certificate

Harbin Institute of Technology, Shenzhen, China, 2021.09 - 2025.07

B.E. in Automation. GPA: 3.7/4.0

Certificate

National University of Singapore, Singapore, 2024.07 - 2025.05

NGNE Program Exchange Student. GPA: 4.2/5.0

Certificate / Co-Curricular Activity Record

Experience

Collaborative, Learning, and Adaptive Robots Lab (CLeAR Lab), Singapore, 2024.08 - Present

Research Intern. Advisor: Prof. Harold Soh

Spatial Cognition and Robotic Automative Learning Laboratory (ScaleLab), 2024.05 - 2024.08

Research Intern. Advisor: Prof. Yao Mu

NUS Learning and Intelligent Systems Lab (LinS Lab), Singapore, 2024.07 - 2025.05

Research Intern. Advisor: Prof. Lin Shao

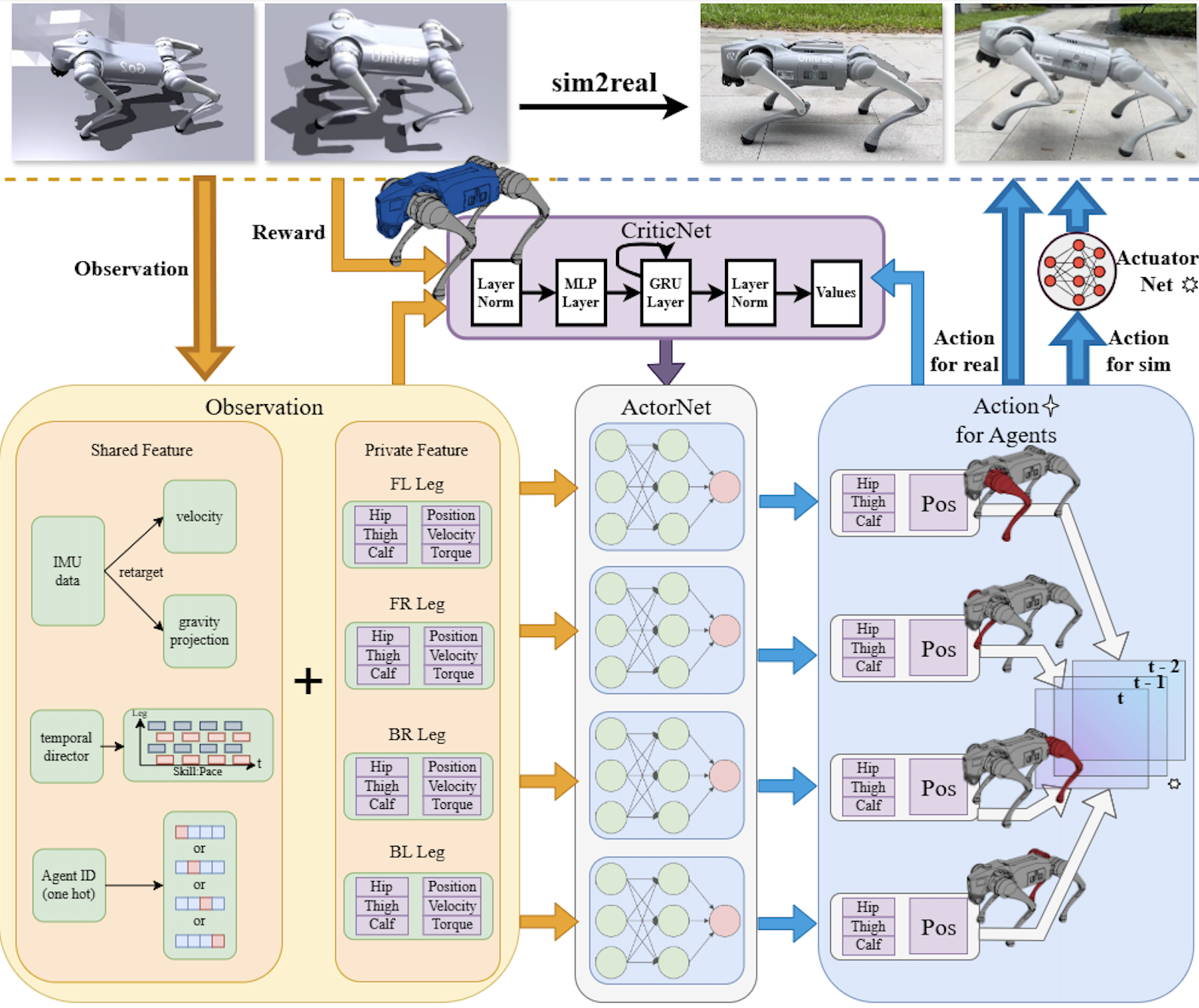

HITsz Reinforcement Learning Group (RLG), Shenzhen, China, 2022.10 - 2024.06

Research Intern. Advisor: Prof. Yanjie Li

Employment

Tencent, Singapore, 2025.12 - 2026.04

Data Scientist Intern. Mentor: Kun Ouyang

Internship Certificate

Autolife Robotics, Singapore, 2025.08 - 2025.11

Robot Software System Intern. Mentor: Siwei Chen

Internship Certificate

Horizon Robotics, Shanghai, China, 2025.06 - 2025.08

Cloud Platform Intern. Mentor: Yusen Qin

Internship Certificate

Talks

Organization

VapourX, 2025.08 - Present

Member and Co-Founder. Founder: Congsheng Xu

Website

CoARA Working Group ERIP, 2025.04 - Present

Member

Website

UNU Global AI Network, 2024.04 - Present

Member

Website

The Millennium Project, 2024.03 - 2024.09

Intern. Mentor: Jerome C. Glenn

Website / Internship Certificate

Entrepreneurship

Fluxia.AI, 2025.12 - Present

Co-Founder. CEO: Jiaming Wang

XSpark AI (无界智航), 2025.10 - Present

Co-Founder. CEO: Shilong Mu

Website

Somnia Lab (梦伴实验室), 2025.10 - Present

Co-Founder

Website / Videos

RoboScience (机科未来), 2024.10 - 2025.10

Early Collaborator. CEO: Ye Tian

Website

Thanks for visiting 😊! Feel free to contact me if you have any questions.
